|

|

@@ -10,7 +10,7 @@

|

|

|

"The goal of this lab is to:\n",

|

|

|

"\n",

|

|

|

"* Review the scientific problem for which the Jacobi solver application has been developed.\n",

|

|

|

- "* Understand the run the single-GPU code of the application.\n",

|

|

|

+ "* Understand the single-GPU code of the application.\n",

|

|

|

"* Learn about NVIDIA Nsight Systems profiler and how to use it to analyze our application.\n",

|

|

|

"\n",

|

|

|

"# The Application\n",

|

|

|

@@ -21,17 +21,23 @@

|

|

|

"\n",

|

|

|

"Laplace Equation is a well-studied linear partial differential equation that governs steady state heat conduction, irrotational fluid flow, and many other phenomena. \n",

|

|

|

"\n",

|

|

|

- "In this lab, we will consider the 2D Laplace Equation on a rectangle with Dirichlet boundary conditions on the left and right boundary and periodic boundary conditions on top and bottom boundary. We wish to solve the following equation:\n",

|

|

|

+ "In this lab, we will consider the 2D Laplace Equation on a rectangle with [Dirichlet boundary conditions](https://en.wikipedia.org/wiki/Dirichlet_boundary_condition) on the left and right boundary and periodic boundary conditions on top and bottom boundary. We wish to solve the following equation:\n",

|

|

|

"\n",

|

|

|

"$\\Delta u(x,y) = 0\\;\\forall\\;(x,y)\\in\\Omega,\\delta\\Omega$\n",

|

|

|

"\n",

|

|

|

"### Jacobi Method\n",

|

|

|

"\n",

|

|

|

- "The Jacobi method is an iterative algorithm to solve a linear system of strictly diagonally dominant equations. The governing Laplace equation is discretized and converted to a matrix amenable to Jacobi-method based solver.\n",

|

|

|

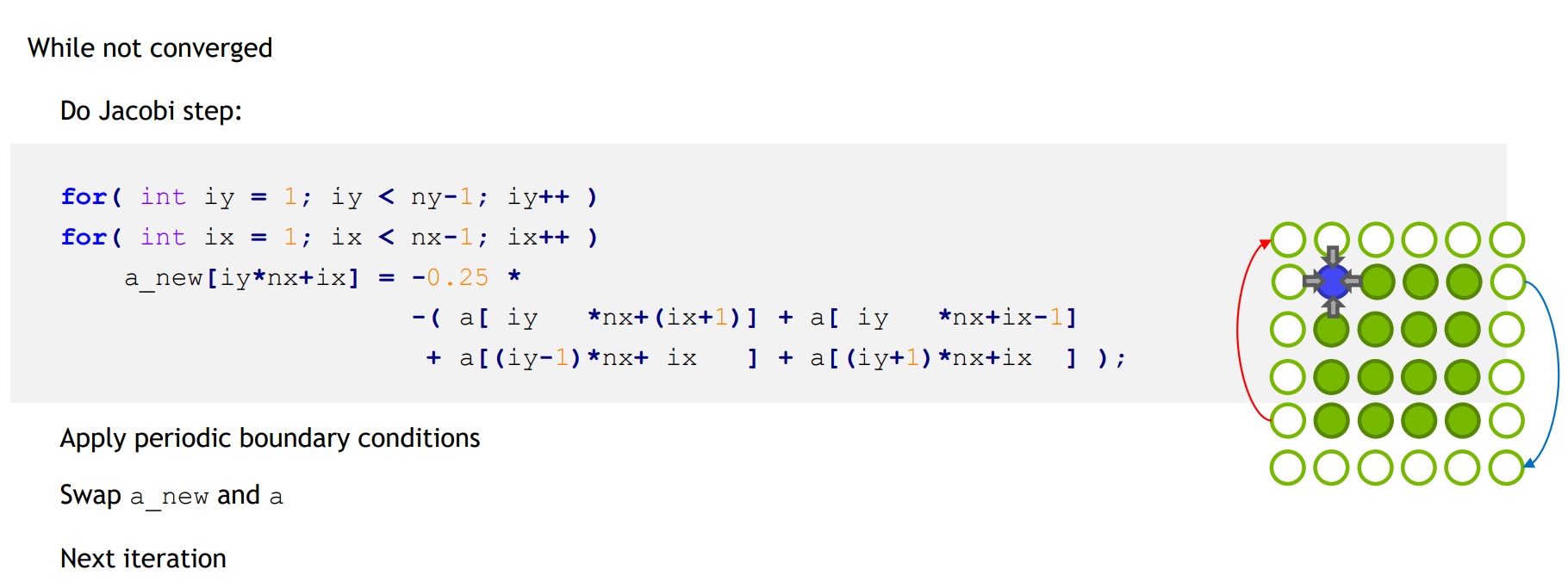

+ "The Jacobi method is an iterative algorithm to solve a linear system of strictly diagonally dominant equations. The governing Laplace equation is discretized and converted to a matrix amenable to Jacobi-method based solver. The pseudo code for Jacobi iterative process can be seen in diagram below:\n",

|

|

|

+ "\n",

|

|

|

+ "\n",

|

|

|

+ "\n",

|

|

|

+ "\n",

|

|

|

+ "The outer loop defines the convergence point, which could either be defined as reaching max number of iterations or when [L2 Norm](https://link.springer.com/referenceworkentry/10.1007%2F978-0-387-73003-5_1070) reaches a max/min value. \n",

|

|

|

+ "\n",

|

|

|

"\n",

|

|

|

"### The Code\n",

|

|

|

"\n",

|

|

|

- "The GPU processing flow follows 3 key steps:\n",

|

|

|

+ "The GPU processing flow in general follows 3 key steps:\n",

|

|

|

"\n",

|

|

|

"1. Copy data from CPU to GPU\n",

|

|

|

"2. Launch GPU Kernel\n",

|

|

|

@@ -39,7 +45,7 @@

|

|

|

"\n",

|

|

|

"\n",

|

|

|

"\n",

|

|

|

- "Let's understand the single-GPU code first. \n",

|

|

|

+ "We follow the same 3 steps in our code. Let's understand the single-GPU code first. \n",

|

|

|

"\n",

|

|

|

"The source code file, [jacobi.cu](../../source_code/single_gpu/jacobi.cu) (click to open), is present in `CFD/English/C/source_code/single_gpu/` directory. \n",

|

|

|

"\n",

|

|

|

@@ -49,12 +55,35 @@

|

|

|

"\n",

|

|

|

"Similarly, have look at the [Makefile](../../source_code/single_gpu/Makefile). \n",

|

|

|

"\n",

|

|

|

- "Refer to the `single_gpu(...)` function. The important steps at each iteration of the Jacobi Solver (that is, the `while` loop) are:\n",

|

|

|

+ "Refer to the `single_gpu(...)` function. The important steps at each iteration of the Jacobi Solver inside `while` loop are:\n",

|

|

|

"1. The norm is set to 0 using `cudaMemset`.\n",

|

|

|

"2. The device kernel `jacobi_kernel` is called to update the interier points.\n",

|

|

|

"3. The norm is copied back to the host using `cudaMemcpy` (DtoH), and\n",

|

|

|

"4. The periodic boundary conditions are re-applied for the next iteration using `cudaMemcpy` (DtoD).\n",

|

|

|

"\n",

|

|

|

+ "```\n",

|

|

|

+ " while (l2_norm > tol && iter < iter_max) {\n",

|

|

|

+ " cudaMemset(l2_norm_d, 0, sizeof(float));\n",

|

|

|

+ "\n",

|

|

|

+ "\t // Compute grid points for this iteration\n",

|

|

|

+ " jacobi_kernel<<<dim_grid, dim_block>>>(a_new, a, l2_norm_d, iy_start, iy_end, nx);\n",

|

|

|

+ " \n",

|

|

|

+ " cudaMemcpy(l2_norm_h, l2_norm_d, sizeof(float), cudaMemcpyDeviceToHost));\n",

|

|

|

+ "\n",

|

|

|

+ " // Apply periodic boundary conditions\n",

|

|

|

+ " cudaMemcpy(a_new, a_new + (iy_end - 1) * nx, nx * sizeof(float), cudaMemcpyDeviceToDevice);\n",

|

|

|

+ " cudaMemcpy(a_new + iy_end * nx, a_new + iy_start * nx, nx * sizeof(float),cudaMemcpyDeviceToDevice);\n",

|

|

|

+ "\n",

|

|

|

+ "\t cudaDeviceSynchronize());\n",

|

|

|

+ "\t l2_norm = *l2_norm_h;\n",

|

|

|

+ "\t l2_norm = std::sqrt(l2_norm);\n",

|

|

|

+ "\n",

|

|

|

+ " iter++;\n",

|

|

|

+ "\t if ((iter % 100) == 0) printf(\"%5d, %0.6f\\n\", iter, l2_norm);\n",

|

|

|

+ " std::swap(a_new, a);\n",

|

|

|

+ " }\n",

|

|

|

+ "```\n",

|

|

|

+ "\n",

|

|

|

"Note that we run the Jacobi solver for 1000 iterations over the grid.\n",

|

|

|

"\n",

|

|

|

"### Compilation and Execution\n",

|

|

|

@@ -135,7 +164,7 @@

|

|

|

"\n",

|

|

|

"# Profiling\n",

|

|

|

"\n",

|

|

|

- "While the program in our labs gives the execution time in its output, it may not always be convinient to time the execution from within the program. Moreover, just timing the execution does not reveal the bottlenecks directly. For that purpose, we profile the program with NVIDIA's NSight Systems profiler's command-line interface (CLI), `nsys`. \n",

|

|

|

+ "While the program in our labs gives the execution time in its output, it may not always be convinient to time the execution from within the program. Moreover, just timing the execution does not reveal the bottlenecks directly. For that purpose, we profile the program with NVIDIA's Nsight Systems profiler's command-line interface (CLI), `nsys`. \n",

|

|

|

"\n",

|

|

|

"### NVIDIA Nsight Systems\n",

|

|

|

"\n",

|

|

|

@@ -146,9 +175,9 @@

|

|

|

"\n",

|

|

|

"\n",

|

|

|

"The highlighted portions are identified as follows:\n",

|

|

|

- "* <span style=\"color:red\">Red</span>: The CPU tab provides thread-level core utilization data. \n",

|

|

|

- "* <span style=\"color:blue\">Blue</span>: The CUDA HW tab displays GPU kernel and memory transfer activities and API calls.\n",

|

|

|

- "* <span style=\"color:orange\">Orange</span>: The Threads tab gives a detailed view of each CPU thread's activity including from OS runtime libraries, MPI, NVTX, etc.\n",

|

|

|

+ "* <span style=\"color:red\">Red</span>: The CPU row provides thread-level core utilization data. \n",

|

|

|

+ "* <span style=\"color:blue\">Blue</span>: The CUDA HW row displays GPU kernel and memory transfer activities and API calls.\n",

|

|

|

+ "* <span style=\"color:orange\">Orange</span>: The Threads row gives a detailed view of each CPU thread's activity including from OS runtime libraries, MPI, NVTX, etc.\n",

|

|

|

"\n",

|

|

|

"#### `nsys` CLI\n",

|

|

|

"\n",

|

|

|

@@ -183,7 +212,7 @@

|

|

|

"\n",

|

|

|

"### Improving performance\n",

|

|

|

"\n",

|

|

|

- "Any code snippet can be taken up for optimizations. However, it is important to realize that our current code is limited to a single GPU. Usually a very powerful first optimization is to parallelize the code, which in our case means running it on multiple GPUs. Thus, we generally follow the cyclical process:\n",

|

|

|

+ "Any code can be taken up for optimizations. We will follow the cyclic process to optimize our code and get best scaling results across multiple GPU:\n",

|

|

|

"\n",

|

|

|

"* **Analyze** the code using profilers to identify bottlenecks and hotspots.\n",

|

|

|

"* **Parallelize** the routines where most of the time in the code is spent.\n",

|

|

|

@@ -215,17 +244,17 @@

|

|

|

"id": "6db3c3c7",

|

|

|

"metadata": {},

|

|

|

"source": [

|

|

|

- "Now, download the report and view it via the GUI. This is the analysis step. Right click on the NVTX tab and select the Events View.\n",

|

|

|

+ "Now, [download the report](../../source_code/jacobi_report.qdrep) and view it via the GUI. This is the analysis step. Right click on the NVTX tab and select the Events View.\n",

|

|

|

"\n",

|

|

|

"\n",

|

|

|

"\n",

|

|

|

- "Clearly, we need to parallelize the \"Jacobi Solve\" routine, which is essentially the iterative Jacobi solver loop. Click on the link to continue to the next lab where we parallelize the code using cudaMemcpy and understand concepts like Peer-to-Peer Memory Access.\n",

|

|

|

+ "Clearly, we need to parallelize the \"Jacobi Solve\" routine, which is essentially the iterative Jacobi solver loop. Click on the link to continue to the next lab where we parallelize the code using `cudaMemcpy` and understand concepts like Peer-to-Peer Memory Access.\n",

|

|

|

"\n",

|

|

|

"# [Next: CUDA Memcpy and Peer-to-Peer Memory Access](../cuda/memcpy.ipynb)\n",

|

|

|

"\n",

|

|

|

"Here's a link to the home notebook through which all other notebooks are accessible:\n",

|

|

|

"\n",

|

|

|

- "# [HOME](../../../introduction.ipynb)\n",

|

|

|

+ "# [HOME](../../../start_here.ipynb)\n",

|

|

|

"\n",

|

|

|

"---\n",

|

|

|

"\n",

|

|

|

@@ -263,7 +292,7 @@

|

|

|

"name": "python",

|

|

|

"nbconvert_exporter": "python",

|

|

|

"pygments_lexer": "ipython3",

|

|

|

- "version": "3.9.5"

|

|

|

+ "version": "3.6.9"

|

|

|

}

|

|

|

},

|

|

|

"nbformat": 4,

|