Переглянути джерело

Updating readme, adding images from run. Updating evaluate function to work with Together correctly

4 змінених файлів з 144 додано та 145 видалено

+ 130

- 131

getting-started/finetuning/vision/README.md

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

+ 14

- 14

getting-started/finetuning/vision/evaluate.py

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

BIN

getting-started/finetuning/vision/loss_curve.png

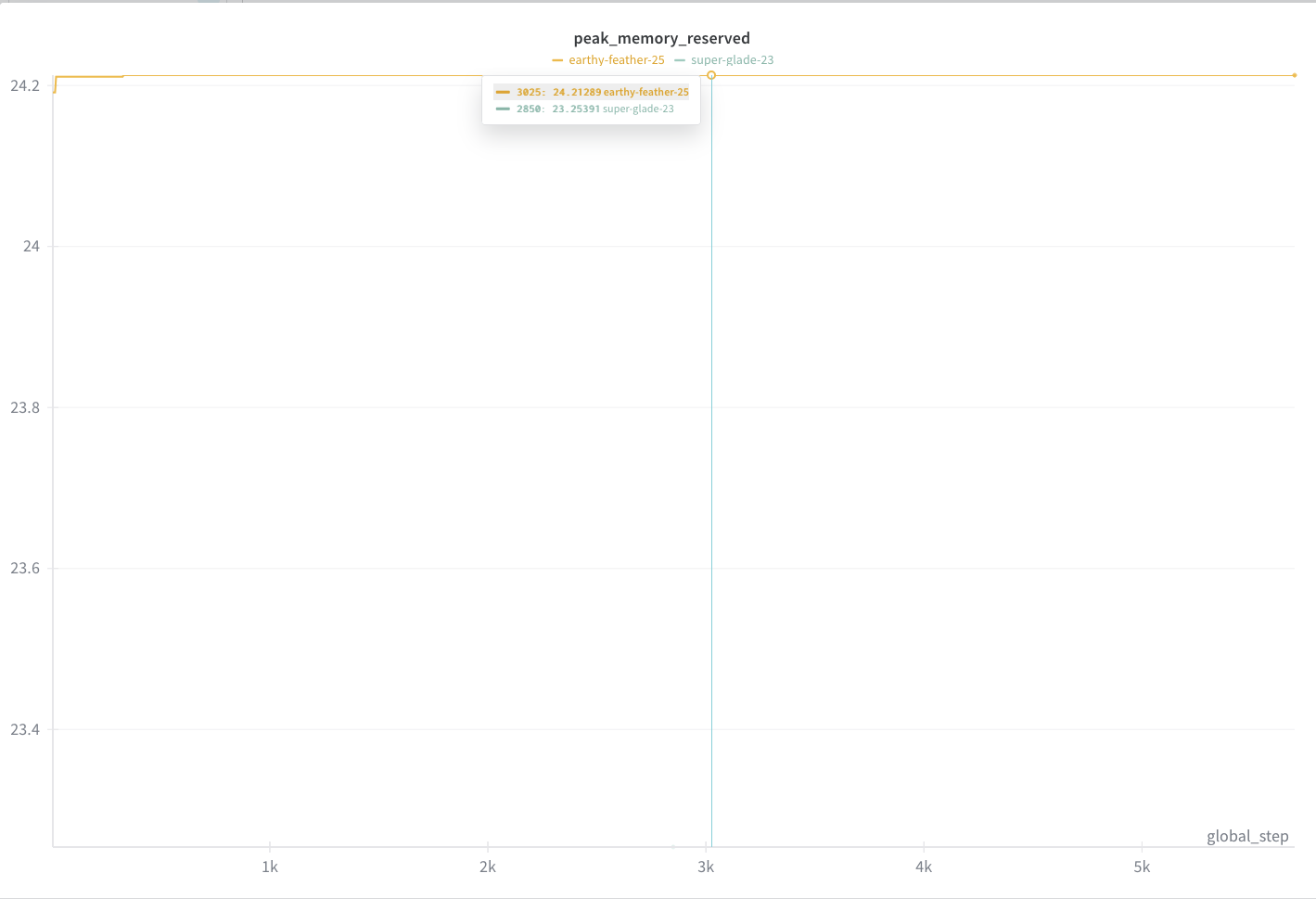

BIN

getting-started/finetuning/vision/peak_memory.png