Sfoglia il codice sorgente

Improve pseudocode

10 ha cambiato i file con 11 aggiunte e 10 eliminazioni

BIN

source-code/Pseudocode/Policy-Iteration/Policy-Iteration.png

+ 3

- 2

source-code/Pseudocode/Policy-Iteration/Policy-Iteration.tex

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

BIN

source-code/Pseudocode/Value-Iteration/Value-Iteration.png

+ 1

- 1

source-code/Pseudocode/Value-Iteration/Value-Iteration.tex

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

BIN

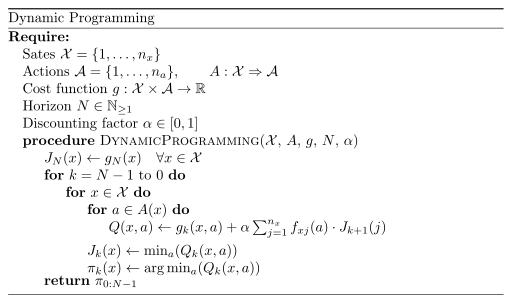

source-code/Pseudocode/dynamic-programming/dynamic-programming.png

+ 3

- 2

source-code/Pseudocode/dynamic-programming/dynamic-programming.tex

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

BIN

source-code/Pseudocode/label-correction/label-correction.png

+ 3

- 4

source-code/Pseudocode/label-correction/label-correction.tex

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

BIN

source-code/Pseudocode/q-learning/q-learning.png

+ 1

- 1

source-code/Pseudocode/q-learning/q-learning.tex

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||

|

||